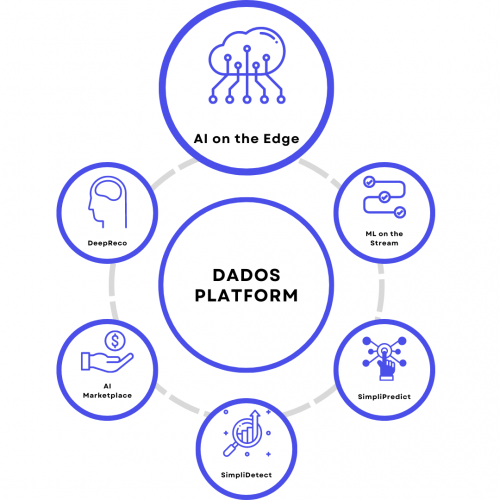

AI On The Edge

Run your models on the devices that generate the data to save time and money and improve security.

Computing on the Edge

Companies spend billions of dollars annually on computing and network costs to move raw data through their systems. Get control of those costs by running models and analytics on the devices where the data is generated.

- Enables distributed machine learning across your system. The model is generated and run on edge devices, and the results are pushed up to the central hub. Each device is its own AI engine.

- Saves money on network and computing costs by eliminating the need to transfer large datasets across the system.

- Improves privacy and security, since the raw data never leaves the device.

- Supported by 5 patents.
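
The distributed pattern in the first point above, where each device builds and runs its own model and pushes only results to the central hub, can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the `EdgeDevice` and `Hub` names, the simple least-squares model, and the sample-weighted averaging at the hub are hypothetical and do not describe the product's actual API or patented methods.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class EdgeDevice:
    """Hypothetical edge device that fits a model on its own readings."""
    device_id: str
    readings: list  # raw (x, y) samples; these stay on the device

    def fit(self) -> dict:
        """Fit y = slope * x + intercept by ordinary least squares, locally."""
        xs = [x for x, _ in self.readings]
        ys = [y for _, y in self.readings]
        x_bar, y_bar = mean(xs), mean(ys)
        sxx = sum((x - x_bar) ** 2 for x in xs)
        if sxx == 0:
            raise ValueError("need at least two distinct x values to fit a line")
        sxy = sum((x - x_bar) * (y - y_bar) for x, y in self.readings)
        slope = sxy / sxx
        intercept = y_bar - slope * x_bar
        # Only this small summary is pushed upstream, not the raw samples.
        return {
            "device_id": self.device_id,
            "n": len(xs),
            "slope": slope,
            "intercept": intercept,
        }


@dataclass
class Hub:
    """Hypothetical central hub that only ever sees per-device results."""
    results: list = field(default_factory=list)

    def receive(self, result: dict) -> None:
        self.results.append(result)

    def combined_slope(self) -> float:
        """Sample-weighted average of device slopes (an illustrative choice)."""
        total = sum(r["n"] for r in self.results)
        return sum(r["slope"] * r["n"] for r in self.results) / total


devices = [
    EdgeDevice("sensor-a", [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]),
    EdgeDevice("sensor-b", [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0), (3.0, 3.0)]),
]
hub = Hub()
for device in devices:
    hub.receive(device.fit())  # a few numbers per device cross the network
```

In this sketch the hub receives four values per device regardless of how many readings the device collected, which is the source of the network savings described above.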